A linear model with more than one input feature is called a multiple linear regression model.

Suppose we first have a univariate model with no intercept, given by

$$Y = X\beta + \varepsilon.$$

For this model, the least squares estimate and the residuals are given by

$$\hat{\beta} = \frac{\sum_{i=1}^{N} x_i y_i}{\sum_{i=1}^{N} x_i^2}, \qquad r_i = y_i - x_i \hat{\beta}.$$
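As a quick illustration, here is a minimal sketch of the no-intercept fit in plain Python. The helper names `dot` and `fit_no_intercept` and the data values are made up for this example.

```python
def dot(a, b):
    """Sum of element-wise products, sum_i a_i * b_i."""
    return sum(ai * bi for ai, bi in zip(a, b))


def fit_no_intercept(x, y):
    """Least squares slope and residuals for y = x * beta + error (no intercept)."""
    beta_hat = dot(x, y) / dot(x, x)
    residuals = [yi - xi * beta_hat for xi, yi in zip(x, y)]
    return beta_hat, residuals


# Made-up illustrative data (not from any real experiment).
x = [1.0, 2.0, 3.0]
y = [2.0, 4.0, 7.0]

beta_hat, residuals = fit_no_intercept(x, y)
print("slope:", beta_hat)
print("residuals:", residuals)
```

A useful sanity check is that the residual vector is orthogonal to x, i.e. `dot(x, residuals)` is zero up to rounding.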

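In vector notation, we define the inner product of two vectors as

$$\langle x, y \rangle = x^T y = \sum_{i=1}^{N} x_i y_i$$

(a notation also frequently used in quantum mechanics). When the inputs are orthogonal, that is, $\langle x_j, x_k \rangle = 0$ for all $j \neq k$, they have no effect on each other's parameter estimates in the model: each coefficient is simply the univariate estimate $\hat{\beta}_j = \langle x_j, y \rangle / \langle x_j, x_j \rangle$.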
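The effect of orthogonality can be checked numerically. The sketch below compares the univariate coefficient of one input with its coefficient in a joint two-input fit, first with an orthogonal partner and then with a correlated one. The function names and the data are invented for illustration, and the joint fit assumes the two inputs are linearly independent.

```python
def inner(a, b):
    """Inner product <a, b> = sum_i a_i * b_i."""
    return sum(ai * bi for ai, bi in zip(a, b))


def univariate_coef(x, y):
    """Coefficient from regressing y on the single input x (no intercept)."""
    return inner(x, y) / inner(x, x)


def two_input_coefs(x1, x2, y):
    """Solve the 2x2 normal equations (X^T X) beta = X^T y by Cramer's rule."""
    a, b, d = inner(x1, x1), inner(x1, x2), inner(x2, x2)
    u, v = inner(x1, y), inner(x2, y)
    det = a * d - b * b  # non-zero when x1 and x2 are linearly independent
    return (d * u - b * v) / det, (a * v - b * u) / det


# Made-up data: x1 and x2 are orthogonal, x2_corr is not orthogonal to x1.
x1 = [1.0, -1.0, 1.0, -1.0]
x2 = [1.0, 1.0, -1.0, -1.0]
x2_corr = [1.0, 1.0, 1.0, -1.0]
y = [3.0, 1.0, 2.0, -2.0]

alone = univariate_coef(x1, y)
with_orthogonal = two_input_coefs(x1, x2, y)[0]
with_correlated = two_input_coefs(x1, x2_corr, y)[0]

print("x1 alone:           ", alone)
print("x1 with orthogonal: ", with_orthogonal)
print("x1 with correlated: ", with_correlated)
```

With the orthogonal partner the coefficient of x1 is unchanged, while the correlated partner shifts it.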

Orthogonal inputs occur most often in balanced, designed experiments (where orthogonality is enforced), but almost never in observational data. Hence we have to orthogonalize the inputs in order to carry this idea further. Suppose that we have an intercept and a single input x. Then the least squares coefficient of x has the form

$$\hat{\beta}_1 = \frac{\langle x - \bar{x}\mathbf{1},\, y \rangle}{\langle x - \bar{x}\mathbf{1},\, x - \bar{x}\mathbf{1} \rangle},$$

where $\bar{x} = \sum_i x_i / N$ and $\mathbf{1} = x_0$ is the vector of N ones. This estimate is the result of two applications of simple regression. The steps are:

(i) Regress x on 1 to produce the residual $z = x - \bar{x}\mathbf{1}$.

(ii) Regress y on the residual z to give the coefficient $\hat{\beta}_1$.
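The two steps above can be sketched directly in Python. The function name `simple_regression_slope` and the data are assumptions made for this example.

```python
def inner(a, b):
    """Inner product <a, b> = sum_i a_i * b_i."""
    return sum(ai * bi for ai, bi in zip(a, b))


def simple_regression_slope(x, y):
    """Slope of y on x (with intercept) via two simple regressions."""
    ones = [1.0] * len(x)
    # Step (i): regress x on the vector of ones; the coefficient is the mean of x.
    x_bar = inner(ones, x) / inner(ones, ones)
    z = [xi - x_bar for xi in x]  # residual of x after removing the intercept part
    # Step (ii): regress y on the residual z.
    return inner(z, y) / inner(z, z)


# Made-up data lying exactly on the line y = 1 + 2x.
x = [1.0, 2.0, 3.0, 4.0]
y = [3.0, 5.0, 7.0, 9.0]

slope = simple_regression_slope(x, y)
print("slope:", slope)
```

Because the data lie exactly on a line with slope 2, the recovered slope should match it up to rounding.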

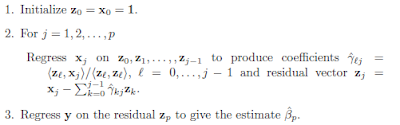

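For multiple regression with inputs $x_0, x_1, \ldots, x_p$, the algorithm works like this:

Step 1: Initialize $z_0 = x_0 = \mathbf{1}$.

Step 2: For $j = 1, 2, \ldots, p$, regress $x_j$ on $z_0, z_1, \ldots, z_{j-1}$ to produce the coefficients $\hat{\gamma}_{\ell j} = \langle z_\ell, x_j \rangle / \langle z_\ell, z_\ell \rangle$, $\ell = 0, \ldots, j-1$, and the residual vector $z_j = x_j - \sum_{k=0}^{j-1} \hat{\gamma}_{kj} z_k$.

Step 3: Regress y on the residual $z_p$ to give the estimate $\hat{\beta}_p$.

The result of this algorithm is

$$\hat{\beta}_p = \frac{\langle z_p, y \rangle}{\langle z_p, z_p \rangle}.$$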

The multiple regression coefficient $\hat{\beta}_j$ represents the additional contribution of $x_j$ to y, after $x_j$ has been adjusted for $x_0, x_1, \ldots, x_{j-1}, x_{j+1}, \ldots, x_p$.
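To make the successive orthogonalization concrete, here is a minimal sketch in plain Python. It assumes the input columns are linearly independent and that the first column is the vector of ones. The function name `successive_orthogonalization` and the data are invented for illustration.

```python
def inner(a, b):
    """Inner product <a, b> = sum_i a_i * b_i."""
    return sum(ai * bi for ai, bi in zip(a, b))


def successive_orthogonalization(columns, y):
    """Return the residual vectors z_0..z_p and the coefficient of the last input.

    columns: list of input vectors x_0..x_p, with x_0 the vector of ones.
    """
    z = [list(columns[0])]  # initialise z_0 = x_0
    for x_j in columns[1:]:
        resid = list(x_j)
        for z_l in z:
            # Coefficient from regressing x_j on the earlier residual z_l.
            gamma = inner(z_l, x_j) / inner(z_l, z_l)
            resid = [r - gamma * v for r, v in zip(resid, z_l)]
        z.append(resid)  # z_j: the part of x_j not explained by z_0..z_{j-1}
    z_p = z[-1]
    beta_p = inner(z_p, y) / inner(z_p, z_p)  # regress y on the last residual
    return z, beta_p


# Made-up data constructed so that y = 1 + 2*x1 - 3*x2 exactly.
x0 = [1.0, 1.0, 1.0, 1.0]
x1 = [1.0, 2.0, 3.0, 4.0]
x2 = [1.0, 0.0, 2.0, 1.0]
y = [0.0, 5.0, 1.0, 6.0]

z, beta_p = successive_orthogonalization([x0, x1, x2], y)
print("coefficient of x2:", beta_p)
```

Note that this only yields the coefficient of the last input. Reordering the columns so that a different $x_j$ comes last gives the coefficient of that input.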

The algorithm discussed above is known as the Gram-Schmidt procedure for multiple regression. We can also represent step 2 of the algorithm in matrix form as

$$X = Z\Gamma,$$

where Z has the residual vectors $z_j$ as its columns (in order) and $\Gamma$ is an upper triangular matrix holding the regression coefficients from step 2. If we introduce the diagonal matrix D with jth diagonal entry $D_{jj} = \|z_j\|$, we get

$$X = Z D^{-1} D \Gamma = QR.$$

This is called the QR decomposition of X. Here $Q = ZD^{-1}$ is an $N \times (p+1)$ matrix with orthonormal columns, $Q^T Q = I$, and $R = D\Gamma$ is a $(p+1) \times (p+1)$ upper triangular matrix.
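The construction of Q and R from Z, D and Γ can be sketched as follows. This is a dependency-free illustration that assumes linearly independent columns, and it is not a numerically robust QR routine. The function names and the data are made up. As one common use of the factorization, the sketch also recovers all coefficients by solving $R\hat{\beta} = Q^T y$ with back substitution.

```python
import math


def inner(a, b):
    """Inner product <a, b> = sum_i a_i * b_i."""
    return sum(ai * bi for ai, bi in zip(a, b))


def qr_by_gram_schmidt(columns):
    """Return (q_cols, R) for X given as a list of its p+1 column vectors.

    q_cols holds the orthonormal columns of Q = Z D^{-1};
    R = D Gamma is (p+1) x (p+1) upper triangular, stored as nested lists.
    """
    m = len(columns)
    z = []
    gamma = [[0.0] * m for _ in range(m)]
    for j, x_j in enumerate(columns):
        resid = list(x_j)
        for l, z_l in enumerate(z):
            gamma[l][j] = inner(z_l, x_j) / inner(z_l, z_l)
            resid = [r - gamma[l][j] * v for r, v in zip(resid, z_l)]
        gamma[j][j] = 1.0  # x_j = z_j + sum over l < j of gamma_lj * z_l
        z.append(resid)
    d = [math.sqrt(inner(z_j, z_j)) for z_j in z]  # D_jj = ||z_j||
    q_cols = [[v / d[j] for v in z[j]] for j in range(m)]
    R = [[d[i] * gamma[i][j] for j in range(m)] for i in range(m)]
    return q_cols, R


def solve_least_squares(columns, y):
    """Solve R beta = Q^T y by back substitution."""
    q_cols, R = qr_by_gram_schmidt(columns)
    m = len(columns)
    rhs = [inner(q, y) for q in q_cols]  # Q^T y
    beta = [0.0] * m
    for i in reversed(range(m)):
        s = rhs[i] - sum(R[i][k] * beta[k] for k in range(i + 1, m))
        beta[i] = s / R[i][i]
    return beta


# Made-up data constructed so that y = 1 + 2*x1 - 3*x2 exactly.
columns = [
    [1.0, 1.0, 1.0, 1.0],  # x0: intercept column of ones
    [1.0, 2.0, 3.0, 4.0],  # x1
    [1.0, 0.0, 2.0, 1.0],  # x2
]
y = [0.0, 5.0, 1.0, 6.0]

q_cols, R = qr_by_gram_schmidt(columns)
beta = solve_least_squares(columns, y)
print("beta:", beta)
```

In practice a library routine such as NumPy's `numpy.linalg.qr` would normally be preferred. The hand-written version is only meant to mirror the Z, D, Γ construction described above.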
